DataFrame操作基礎

紫式晦澀每日一篇文章第31天

前言

-

今天是2022年第29天, 全年第5週, 一月的第五個週六. 今天來爬梳

DataFrames的操作基礎. -

今天的素材主要來自DataCamp課程:Data Manipulation with pandas . 內容有四大章節, 可以蒸餾成心法, 技法, 用法.

課程概述:

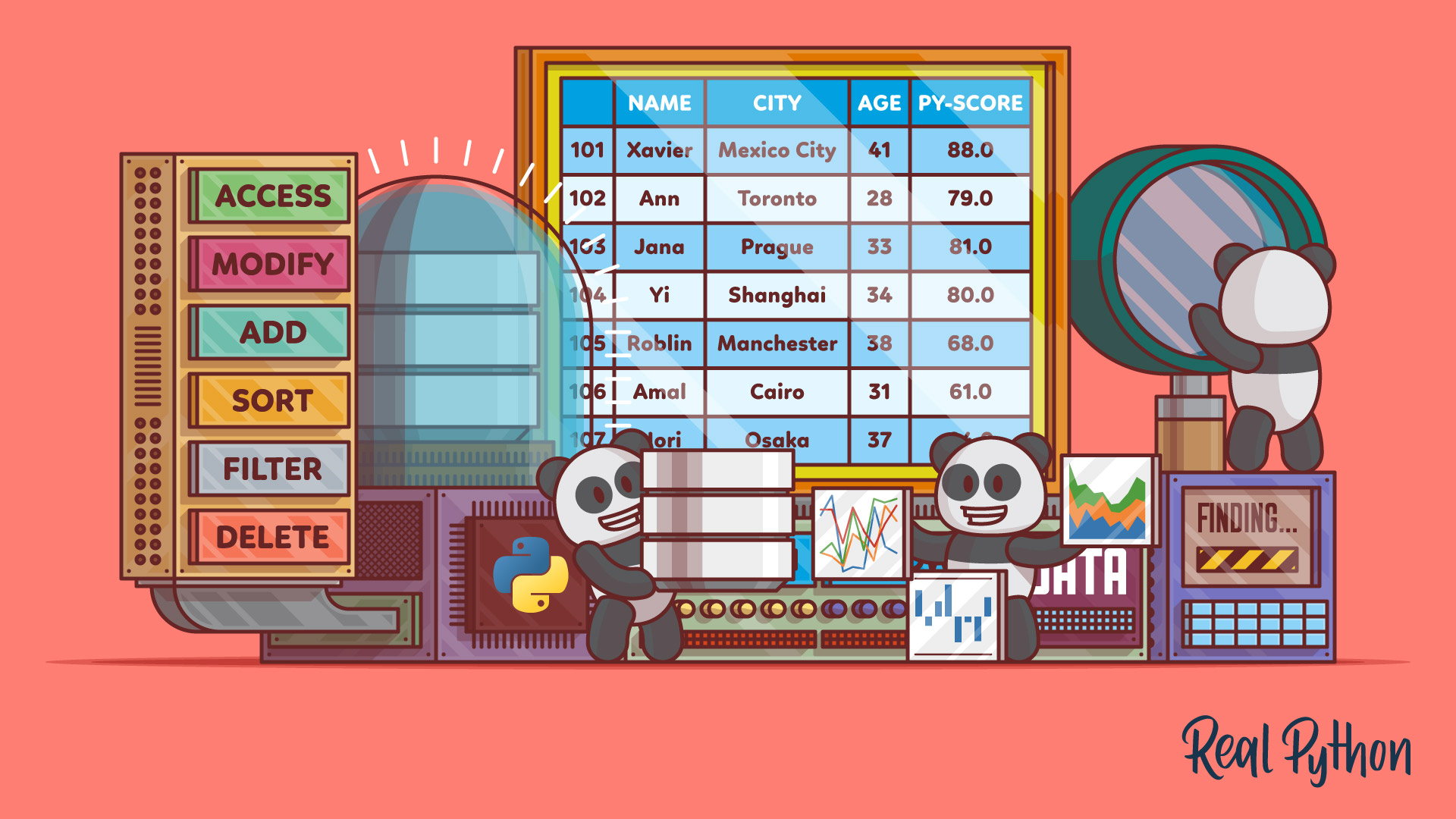

- Pandas: 最受歡迎, 可作「資料操作(Data manipulation)」與「資料分析(Data analysis)」.

- 操作(Manipulation): extract, filter, transform

- 核心資料課學概念

- 統計工作流: import, clean, calculate statistics, create visualizations.

A. 轉換DataFrames (Transforming DataFrames)

基礎pandas: 有4大任務

-

- 調查DataFrames (Inspect)

-

- 排序列 (Sorting rows)

-

- 子集 (Subsetting)

-

- 增加新欄位 (Adding new columns)

心法:找到滿足特定條件的子資料, 過濾排序產生洞察

找出滿足特定條件的資料:

- 當我們有很大的資料庫, 就會需要去搜尋特定條件的子資料集來研究.

- 接著我們會篩選出這些資料集, 根據我們感興趣的某些量值, 來做排序, 找最佳表現的. 最佳表現的某個程度上會降低我們的風險, 往目標邁進.

技法: 排序sort_values(), 過濾isin()

物件導向:

- 方法:

head(),info(),describe(),sort_values(),isin() - 屬性:

.shape,.values,.columns,.index,[] - 資料結構:

Index

用法: 人定指標, 過濾排序

工作流:

- 定義指標

- 根據指標過濾資料

- 排序過濾後的資料

- 印出排序過濾資料的特定欄位

例子:

- 定義贏過大盤.

- 根據「贏過大盤」過濾股票.

- 排序「贏過大盤的股票」, 以報酬率.

- 印出「報酬率由高到低的股票」的詳細資訊.

語法: 8小單元

a. 查DataFrame. Inspecting a DataFrame:

-

.head()方法: 回傳前幾列. returns the first few rows (the “head” of the DataFrame).

-

.info()方法: 每個欄位(column)的資訊, 包含「資料類型 (Data type)」與「遺失值數量 (number of missing values)」 shows information on each of the columns, such as the data type and number of missing values.

-

.shape屬性: 回傳DataFrame的維度.returns the number of rows and columns of the DataFrame.

-

.describe()方法 : 計算每一列的總結統計量calculates a few summary statistics for each column.

b. DataFrame的組成. Parts of a DataFrame:

-

.values屬性: 二維度陣列值. A two-dimensional NumPy array of values.

-

.columns屬性: 行的索引, 行的名字. An index of columns: the column names.

-

.index屬性: 列的索引, 列的名字. An index for the rows: either row numbers or row names.

邏輯在pands的

Index資料結構上

You can usually think of indexes as a list of strings or numbers, though the pandas Index data type allows for more sophisticated options.

c. 根據column排序橫列. Sorting rows:

sort_values()方法: 根據row名稱作排序(遞增或遞減)- 根據一row排序

df.sort_values("breed") - 根據二row排序

df.sort_values(["breed", "weight_kg"])

d. 選column. Subsetting columns.:

- 中括號

[]可以來選columns. - 選一個column.

df["col_a"] - 選二個columns.

df[["col_a", "col_b"]]

e. 過濾選擇rows. Filtering/Selecting Rows:

- 找到資料集有趣的部分

- 找「滿足特定條件的rows」

- 找高度超過60公分的狗:

dogs[dogs["height_cm"] > 60] - 找特定顏色的狗:

dogs[dogs["color"] == "tan"] - 找特定顏色且高度超過60公分的狗:

dogs[(dogs["height_cm"] > 60) & (dogs["color"] == "tan")]

如何找某些股票?

f. 利用類別變數過濾rows. Subsetting rows by categorical variables:

- 使用

or算子|來選擇多個類別, 不是很容易操作多個類別. - 使用

.isin()方法: 寫條件來分類 - 例子:

colors定義出要找的三種顏色;condition把符合這些顏色的狗的indicator收集出來.dogs[condition]就留下了需要的那些狗.

target_stock = [需要的股票號碼]

condition = data[“number”].isin(target_stock)

data[condition]就把需要的股票列了出來.

g. 增加新欄位 (Adding new columns)三招:

-

- 轉換 Transforming.

-

- 改變 Mutating.

-

- 特徵工程 Feature Engineering.

如何判斷是否比大盤好?

h. 組合技(Combo-attack!):

- 資料操弄四招:

- a. row排序,

- b. column選取,

- c. rows過濾,

- d. column擴增

1# 造欄位: Create indiv_per_10k col as homeless individuals per 10k state pop

2homelessness["indiv_per_10k"] = 10000 * homelessness["individuals"] / homelessness["state_pop"]

3

4# 過濾rows: Subset rows for indiv_per_10k greater than 20

5high_homelessness = homelessness[homelessness["indiv_per_10k"] > 20]

6

7# 排序rows: Sort high_homelessness by descending indiv_per_10k

8high_homelessness_srt = high_homelessness.sort_values("indiv_per_10k", ascending=False)

9

10# 選取rows: From high_homelessness_srt, select the state and indiv_per_10k cols

11result = high_homelessness_srt[["state", "indiv_per_10k"]]

12

13# See the result

14print(result)

B. 聚合DataFrames (Aggregating DataFrames)

統計Pandas: 有3大任務

-

- 計算行總結統計量 (Summary statistics on DataFrame columns)

-

- 群化總結統計量 (Grouped summary statistics)

-

- 樞紐分析(Pivot table)

樞紐分析(pivot table)

- 用來匯總其它表的數據。

- 首先把源表分組(grouping),然後對各組內數據做匯總操作如排序、平均、累加、計數或字符串連接等。

- 透視表用於數據處理,在數據可視化程序如電子表格或商業智能軟件中常見。

- 這種「旋轉」或者pivoting匯總表的概念得以命名。

- 靜態版本: 列連表(Contingency Table)

心法: 總結統計量, 操作表格, 樞紐分析

總結統計量: 既然是表格, 我們常常需要往各種狀況去做總結, 算一些指標來做. 操作表格: 排序, 丟掉重複的, 計算數量, 計算比例 群總結, 樞紐分析: 分小群, 做函數操作

技法: 客製化函數df['column'].agg(function), 樞紐分析pivot_table()

統計函數: .mean(), .median(), .max(), .min(), .cumsum(), .cummax().

客製化函數: .agg(my_function); 執行上df['column'].agg(function).

操作表格: sort_values(), drop_duplicates(), subset

排序, 計算比例: .value_counts(sort = True, normalize = True).

群總結, 樞紐分析: .groupby(), agg(), pivot_table().

用法: 分小群, 做函數操作, 樞紐分析

分小群: 不同的時間點, 不同的報酬率 做函數操作: 與大盤比較, 損益率下降, 上頂, 下頂. 樞紐分析: 滿足特定條件的股票收益率.

語法: 11個小單元

a. 平均與中位數 (Mean and Median):

- 可以直接對

DataFrame操作統計方法 - 也可以先得到

Series數據結構. .mean()方法: 得到平均..median()方法: 得到中位數.

1# Print the head of the sales DataFrame

2print(sales.head())

3

4

5# Print the info about the sales DataFrame

6print(sales.info())

7

8# Print the mean of weekly_sales

9print(sales["weekly_sales"].mean())

10

11# Print the median of weekly_sales

12print(sales["weekly_sales"].median())

13

14# Pandas的Series

15In [1]:

16type(sales["weekly_sales"])

17Out[1]:

18pandas.core.series.Series

19

20# Pandas的DataFrame

21In [2]:

22sales.median()

23Out[2]:

24

25store 13.000

26department 40.000

27weekly_sales 12049.065

28is_holiday 0.000

29temperature_c 16.967

30fuel_price_usd_per_l 0.743

31unemployment 8.099

32dtype: float64

33

34# 整個表格是DataFrame

35In [4]:

36type(sales)

37Out[4]:

38pandas.core.frame.DataFrame

b.總結日期 Summarizing Dates:

- 資料結構:

datetime64 .max():找到最近日期.min():找到最遠日期

1# Print the maximum of the date column

2print(sales["date"].max())

3

42012-10-26 00:00:00

5

6# Print the minimum of the date column

7print(sales["date"].min())

8

92010-02-05 00:00:00

10

11In [1]:

12type(sales["date"])

13Out[1]:

14pandas.core.series.Series

15In [2]:

16type(sales["date"][1])

17Out[2]:

18pandas._libs.tslibs.timestamps.Timestamp

c.敏捷總結 (Efficient Summaries):

.agg()方法: 客製化DataFrame函數, 可以對每個column執行函數- 語法:

df['column'].agg(function)

1# 客製化函數 A custom IQR function

2import numpy as np

3def iqr(column):

4 return column.quantile(0.75) - column.quantile(0.25)

5

6# 執行客製化函數到某一欄位 Print IQR of the temperature_c column

7print(sales["temperature_c"].agg(iqr))

8

9# 執行函數到其他欄位 Update to print IQR of temperature_c, fuel_price_usd_per_l, & unemployment

10print(sales[["temperature_c", "fuel_price_usd_per_l", "unemployment"]].agg(iqr))

11

12# 執行兩種函數到三個欄位 Update to print IQR and median of temperature_c, fuel_price_usd_per_l, & unemployment

13print(sales[["temperature_c", "fuel_price_usd_per_l", "unemployment"]].agg([iqr, np.median]))

- 結果

1<script.py> output:

2 temperature_c fuel_price_usd_per_l unemployment

3 iqr 16.583 0.073 0.565

4 median 16.967 0.743 8.099

如何對各種股票, 來做一些總結統計量?

d. 累積統計量(Cumulative statistics):

- 統計量: 累積合(Cumulative Sun), 累計最大(Cumulative Max)

sort_values(): 排序rows.cumsum(): 得到累計合.cummax(): 得到累積最大

1# 排序時間 Sort sales_1_1 by date

2sales_1_1 = sales_1_1.sort_values("date")

3

4# 製造新欄位:累計合 Get the cumulative sum of weekly_sales, add as cum_weekly_sales col

5sales_1_1["cum_weekly_sales"] = sales_1_1["weekly_sales"].cumsum()

6

7# 製造新欄位:累計最大 Get the cumulative max of weekly_sales, add as cum_max_sales col

8sales_1_1["cum_max_sales"] = sales_1_1["weekly_sales"].cummax()

9

10# 印出製造的新欄位 See the columns you calculated

11print(sales_1_1[["date", "weekly_sales", "cum_weekly_sales", "cum_max_sales"]])

可以造累計獲利, 累計報酬率.

e. 丟掉複製的(Dropping duplicates):

- 把重複的資料刪掉

.drop_duplicates()subset: 紀錄欄位名字的list.

1# 丟掉重複的 Drop duplicate store/type combinations

2store_types = sales.drop_duplicates(subset=["store", "type"])

3print(store_types.head())

4

5# 丟掉重複的 Drop duplicate store/department combinations

6store_depts = sales.drop_duplicates(subset=["store", "department"])

7print(store_depts.head())

8

9# 找特定的rows, 丟掉重複的 Subset the rows where is_holiday is True and drop duplicate dates

10holiday_dates = sales[sales["is_holiday"]].drop_duplicates(subset="date")

11

12# Print date col of holiday_dates

13print(holiday_dates["date"])

有什麼應用?

f. 計數類別變數 (Counting categorical variables):

.value_counts(): 計算數量..value_counts(normalize= True): 計算比例..value_counts(sort = True, normalize = True): 排序, 計算比例.

1# 計算每一個種類的個數 Count the number of stores of each type

2store_counts = store_types["type"].value_counts()

3print(store_counts)

4

5# 計算各種的比例. Get the proportion of stores of each type

6store_props = store_types["type"].value_counts(normalize=True)

7print(store_props)

8

9# 計算部門的數量 Count the number of each department number and sort

10dept_counts_sorted = store_depts["department"].value_counts(sort=True)

11print(dept_counts_sorted)

12

13# 計算部門的比例 Get the proportion of departments of each number and sort

14dept_props_sorted = store_depts["department"].value_counts(sort=True, normalize=True)

15print(dept_props_sorted)

g. 各種類型商店的發生比例:暴力解(What percent of sales occurred at each store type?):

.groupby():根據群去計算總結統計- 計算總數, 計算個別數. 接著算比例.

1# Calc total weekly sales

2sales_all = sales["weekly_sales"].sum()

3

4# Subset for type A stores, calc total weekly sales

5sales_A = sales[sales["type"] == "A"]["weekly_sales"].sum()

6

7# Subset for type B stores, calc total weekly sales

8sales_B = sales[sales["type"] == "B"]["weekly_sales"].sum()

9

10# Subset for type C stores, calc total weekly sales

11sales_C = sales[sales["type"] == "C"]["weekly_sales"].sum()

12

13# Get proportion for each type

14sales_propn_by_type = [sales_A, sales_B, sales_C] / sales_all

15print(sales_propn_by_type)

h. 優雅解:使用.groupby():

.groupby():分群函數sales.groupby("type"): 是DataFrameGroupBy資料類型sales.groupby("type")["weekly_sales"].sum():是Series資料類型sales_by_type_is_holiday = sales.groupby(["type", "is_holiday"])["weekly_sales"].sum()可以做四種子族的和

1# Group by type; calc total weekly sales

2sales_by_type = sales.groupby("type")["weekly_sales"].sum()

3

4# Get proportion for each type

5sales_propn_by_type = sales_by_type / sum(sales_by_type)

6print(sales_propn_by_type)

7

8# Group by type and is_holiday; calc total weekly sales

9sales_by_type_is_holiday = sales.groupby(["type", "is_holiday"])["weekly_sales"].sum()

10print(sales_by_type_is_holiday)

11

12type is_holiday

13A False 2.337e+08

14 True 2.360e+04

15B False 2.318e+07

16 True 1.621e+03

17Name: weekly_sales, dtype: float64

可以選不同的控制變量, 剩下積分掉!

i. 多重群統計量(Multiple grouped summaries):

.agg(): 計算多個變數的多個統計量.np.min, np.max, np.mean, np.mean: 都是Numpy上方便用的統計量函數.sales.groupby("type")["weekly_sales"].agg([np.min, np.max, np.mean, np.median]): 根據每週銷量分類(A,B兩類), 計算其最小最大平均中位數.unemp_fuel_stats = sales.groupby("type")[["unemployment", "fuel_price_usd_per_l"]].agg([np.min, np.max, np.mean, np.median])根據兩變數分類, 計算其最小最大平均中位數.

1# Import numpy with the alias np

2import numpy as np

3

4# 輸出分位數: For each store type, aggregate weekly_sales: get min, max, mean, and median

5sales_stats = sales.groupby("type")["weekly_sales"].agg([np.min, np.max, np.mean, np.median])

6

7# Print sales_stats

8print(sales_stats)

9

10 amin amax mean median

11type

12A -1098.0 293966.05 23674.667 11943.92

13B -798.0 232558.51 25696.678 13336.08

14

15# For each store type, aggregate unemployment and fuel_price_usd_per_l: get min, max, mean, and median

16unemp_fuel_stats = sales.groupby("type")[["unemployment", "fuel_price_usd_per_l"]].agg([np.min, np.max, np.mean, np.median])

17

18# Print unemp_fuel_stats

19print(unemp_fuel_stats)

如何定義出有意義的分類, 接著使用分類的統計量呢? 如何將股票分類? 分好的類要看什麼的統計量分佈呢?

如果要match 時間前幾位, 是否要用regular expression?

j. 單變數樞紐(Pivoting on one variable):

- 樞紐表(Privot tables): spreadsheets聚合資料的標準方法

.pivot_table(): 其實就是替代了.groupby().

1# 對各種店舖類型, 樞紐每週銷量 Pivot for mean weekly_sales for each store type

2mean_sales_by_type = sales.pivot_table(values="weekly_sales", index="type")

3

4# Print mean_sales_by_type

5print(mean_sales_by_type)

6

7 weekly_sales

8type

9A 23674.667

10B 25696.678

- 在

.pivot_table()可以用aggfunc形式參數, 來給「多個函數」來總結values.

1# Import NumPy as np

2import numpy as np

3

4# Pivot for mean and median weekly_sales for each store type

5mean_med_sales_by_type = sales.pivot_table(values="weekly_sales", index="type", aggfunc=[np.mean, np.median])

6

7# Print mean_med_sales_by_type

8print(mean_med_sales_by_type)

9

10 mean median

11 weekly_sales weekly_sales

12type

13A 23674.667 11943.92

14B 25696.678 13336.08

- 兩個維度的樞紐表: 根據「類型」與「是否是假日」來找到「每週銷量」的樞紐表

- 第一維度用

index, 第二維度用columns.

1# 根據「類型」與「是否是假日」來找到「每週銷量」的樞紐表 Pivot for mean weekly_sales by store type and holiday

2mean_sales_by_type_holiday = sales.pivot_table(values="weekly_sales", index="type", columns="is_holiday")

3

4# Print mean_sales_by_type_holiday

5print(mean_sales_by_type_holiday)

6

7 is_holiday False True

8 type

9 A 23768.584 590.045

10 B 25751.981 810.705

那要怎麼組裝更多呢?

1import numpy as np

2

3new = sales.pivot_table(values="weekly_sales", index="type", columns="is_holiday", aggfunc=[np.mean, np.median])

4

5print(new)

6 mean median

7is_holiday False True False True

8type

9A 23768.584 590.045 12028.955 37.500

10B 25751.981 810.705 13348.680 810.705

組合起來非常方便, 可以得到各種樞紐分析!

k. 利用樞紐表來填補缺失值與和值(Fill in missing values and sum values with pivot tables):

- 繼續使用

.pivot_table()方法的形式參數:fill_value與margins. fill_value可以補遺失值(Imputation). Dealing with Missing Data in Python 可以詳細學.margins:用兩個變數做樞紐fill_value=0: 把missing value都補成0.

1# Print mean weekly_sales by department and type; fill missing values with 0

2print(sales.pivot_table(values="weekly_sales", index="department", columns="type", fill_value=0))

3

4type A B

5department

61 30961.725 44050.627

72 67600.159 112958.527

83 17160.003 30580.655

94 44285.399 51219.654

105 34821.011 63236.875

11... ... ...

1295 123933.787 77082.102

1396 21367.043 9528.538

1497 28471.267 5828.873

1598 12875.423 217.428

1699 379.124 0.000

margins=True: 多了一個群體的mean, 以及各部門的mean.

1# Print the mean weekly_sales by department and type; fill missing values with 0s; sum all rows and cols

2print(sales.pivot_table(values="weekly_sales", index="department", columns="type", fill_value=0, margins=True))

3

4type A B All

5department

61 30961.725 44050.627 32052.467

72 67600.159 112958.527 71380.023

83 17160.003 30580.655 18278.391

94 44285.399 51219.654 44863.254

105 34821.011 63236.875 37189.000

11... ... ... ...

1296 21367.043 9528.538 20337.608

1397 28471.267 5828.873 26584.401

1498 12875.423 217.428 11820.590

1599 379.124 0.000 379.124

16All 23674.667 25696.678 23843.950

17

18[81 rows x 3 columns]

- 加入

aggfunc, 兩張大表就都有了

1print(sales.pivot_table(values="weekly_sales", index="department", columns="type", fill_value=0, margins=True, aggfunc=[np.mean, np.median]))

2 mean median

3type A B All A B All

4department

51 30961.725 44050.627 32052.467 24743.070 31986.360 25478.905

62 67600.159 112958.527 71380.023 68614.770 112812.985 70001.020

73 17160.003 30580.655 18278.391 13396.805 23145.625 13788.100

84 44285.399 51219.654 44863.254 42639.470 51485.930 44011.535

95 34821.011 63236.875 37189.000 30299.045 60400.660 30943.785

10... ... ... ... ... ... ...

1196 21367.043 9528.538 20337.608 25187.875 9503.140 23935.495

1297 28471.267 5828.873 26584.401 27016.580 5856.705 26354.365

1398 12875.423 217.428 11820.590 12636.275 34.100 11943.840

1499 379.124 0.000 379.124 167.000 0.000 167.000

15All 23674.667 25696.678 23843.950 11943.920 13336.080 12049.065

16

17[81 rows x 6 columns]

C. 切與標DataFrames (Slicing and Indexing DataFrames)

統計Pandas: 2大太極

- 標(Indexes): 給行與列名分.

- 切(Slicing): 過濾過濾過濾.

心法

****: ****: ****:

技法

****: ****: ****:

用法

****: ****: ****:

語法

a.設定與移除索引(Setting and removing indexes):

- pandas可以指定columns為「索引(index)」

.ser_index(): 得到column的name.reset_index(): 重設索引; Reset the index of the DataFrame, and use the default one instead. If the DataFrame has a MultiIndex, this method can remove one or more levels.

1# Look at temperatures

2print(temperatures)

3

4# 把city當作新的索引 Index temperatures by city

5temperatures_ind = temperatures.set_index("city")

6

7# Look at temperatures_ind

8print(temperatures_ind)

9

10每個column都是城市的名字

11print(temperatures_ind)

12 date country avg_temp_c

13city

14Abidjan 2000-01-01 Côte D'Ivoire 27.293

15Abidjan 2000-02-01 Côte D'Ivoire 27.685

16Abidjan 2000-03-01 Côte D'Ivoire 29.061

17Abidjan 2000-04-01 Côte D'Ivoire 28.162

18Abidjan 2000-05-01 Côte D'Ivoire 27.547

19... ... ... ...

20Xian 2013-05-01 China 18.979

21Xian 2013-06-01 China 23.522

22Xian 2013-07-01 China 25.251

23Xian 2013-08-01 China 24.528

24Xian 2013-09-01 China NaN

25

26[16500 rows x 3 columns]

27

28# 重設索引, 讓欄位名字讓出來變數字Reset the index, keeping its contents

29print(temperatures_ind.reset_index())

30

31 city date country avg_temp_c

320 Abidjan 2000-01-01 Côte D'Ivoire 27.293

331 Abidjan 2000-02-01 Côte D'Ivoire 27.685

342 Abidjan 2000-03-01 Côte D'Ivoire 29.061

353 Abidjan 2000-04-01 Côte D'Ivoire 28.162

364 Abidjan 2000-05-01 Côte D'Ivoire 27.547

37... ... ... ... ...

3816495 Xian 2013-05-01 China 18.979

3916496 Xian 2013-06-01 China 23.522

4016497 Xian 2013-07-01 China 25.251

4116498 Xian 2013-08-01 China 24.528

4216499 Xian 2013-09-01 China NaN

43

44[16500 rows x 4 columns]

45

46# 丟掉城市索引 Reset the index, dropping its contents

47print(temperatures_ind.reset_index(drop=True))

48

49 date country avg_temp_c

500 2000-01-01 Côte D'Ivoire 27.293

511 2000-02-01 Côte D'Ivoire 27.685

522 2000-03-01 Côte D'Ivoire 29.061

533 2000-04-01 Côte D'Ivoire 28.162

544 2000-05-01 Côte D'Ivoire 27.547

55... ... ... ...

5616495 2013-05-01 China 18.979

5716496 2013-06-01 China 23.522

5816497 2013-07-01 China 25.251

5916498 2013-08-01 China 24.528

6016499 2013-09-01 China NaN

61

62[16500 rows x 3 columns]

63

b.用.loc[]取子集(Subsetting with .loc[]):

.loc[]:索引(Indexes)的殺手功能.- 舊有方法:

df[df["column"].isin(values)] - 新方法:

df_ind.loc[values] - 想找的城市-莫斯科&聖彼得堡:

cities = ["Moscow", "Saint Petersburg"]. - 用之前

.isin()的方法:print(temperatures[temperatures["city"].isin(cities)]). - 現在更簡潔, 用

.loc[]方法:print(temperatures_ind.loc[cities]).

1# Make a list of cities to subset on

2cities = ["Moscow", "Saint Petersburg"]

3

4# 單純的取子資料- Subset temperatures using square brackets

5print(temperatures[temperatures["city"].isin(cities)])

6

7 date city country avg_temp_c

810725 2000-01-01 Moscow Russia -7.313

910726 2000-02-01 Moscow Russia -3.551

1010727 2000-03-01 Moscow Russia -1.661

1110728 2000-04-01 Moscow Russia 10.096

1210729 2000-05-01 Moscow Russia 10.357

13... ... ... ... ...

1413360 2013-05-01 Saint Petersburg Russia 12.355

1513361 2013-06-01 Saint Petersburg Russia 17.185

1613362 2013-07-01 Saint Petersburg Russia 17.234

1713363 2013-08-01 Saint Petersburg Russia 17.153

1813364 2013-09-01 Saint Petersburg Russia NaN

19

20

21# 以選取的索引擺前面 Subset temperatures_ind using .loc[]

22print(temperatures_ind.loc[cities])

23 date country avg_temp_c

24city

25Moscow 2000-01-01 Russia -7.313

26Moscow 2000-02-01 Russia -3.551

27Moscow 2000-03-01 Russia -1.661

28Moscow 2000-04-01 Russia 10.096

29Moscow 2000-05-01 Russia 10.357

30... ... ... ...

31Saint Petersburg 2013-05-01 Russia 12.355

32Saint Petersburg 2013-06-01 Russia 17.185

33Saint Petersburg 2013-07-01 Russia 17.234

34Saint Petersburg 2013-08-01 Russia 17.153

35Saint Petersburg 2013-09-01 Russia NaN

可能要先把需要的column整理到前面?

c.設定多等級索引(Setting multi-level indexes):

- 多欄位索引:索引可以來自很多行. Indexes can also be made out of multiple columns, forming a multi-level index (sometimes called a hierarchical index).

- 好處: 「巢狀類別變數(Nested Categorical Variables)」比較自然.

- 壞處: 操作多等級索引會比操作columns還要複雜一點.

.set_index(): 可以指定兩個column names當作list[("a", "b"), ("c", "d")]: 節選的subset得用tuple資料型態來寫..loc[]:帶入需要的subset.

1# 指定索引 : Index temperatures by country & city

2temperatures_ind = temperatures.set_index(["country", "city"])

3

4In [1]:

5type(temperatures_ind)

6Out[1]:

7pandas.core.frame.DataFrame

8> 得到DataFrame

9

10In [2]:

11temperatures_ind.head()

12Out[2]:

13

14 date avg_temp_c

15country city

16Côte D'Ivoire Abidjan 2000-01-01 27.293

17 Abidjan 2000-02-01 27.685

18 Abidjan 2000-03-01 29.061

19 Abidjan 2000-04-01 28.162

20 Abidjan 2000-05-01 27.547

21

22

23# List of tuples: Brazil, Rio De Janeiro & Pakistan, Lahore

24rows_to_keep = [("Brazil", "Rio De Janeiro"), ("Pakistan", "Lahore")]

25

26# Subset for rows to keep

27print(temperatures_ind.loc[rows_to_keep])

28

29> 用巢狀列表排好, 只留下兩個城市

30print(temperatures_ind.loc[rows_to_keep])

31 date avg_temp_c

32country city

33Brazil Rio De Janeiro 2000-01-01 25.974

34 Rio De Janeiro 2000-02-01 26.699

35 Rio De Janeiro 2000-03-01 26.270

36 Rio De Janeiro 2000-04-01 25.750

37 Rio De Janeiro 2000-05-01 24.356

38... ... ...

39Pakistan Lahore 2013-05-01 33.457

40 Lahore 2013-06-01 34.456

41 Lahore 2013-07-01 33.279

42 Lahore 2013-08-01 31.511

43 Lahore 2013-09-01 NaN

44

45[330 rows x 2 columns]

如何設計適當的「巢狀索引」來過濾出需要的股票收益率?

d.利用索引值排序(Sorting by index values):

- 之前使用

.sort_values()來改變rows的順序 - 現在介紹

.sort_index()來根據索引排序.

1# 照「國家」名稱ABCD排序: Sort temperatures_ind by index values

2print(temperatures_ind.sort_index())

3

4

5 date avg_temp_c

6country city

7Afghanistan Kabul 2000-01-01 3.326

8 Kabul 2000-02-01 3.454

9 Kabul 2000-03-01 9.612

10 Kabul 2000-04-01 17.925

11 Kabul 2000-05-01 24.658

12... ... ...

13Zimbabwe Harare 2013-05-01 18.298

14 Harare 2013-06-01 17.020

15 Harare 2013-07-01 16.299

16 Harare 2013-08-01 19.232

17 Harare 2013-09-01 NaN

18

19# 照「城市」名稱ABCD排序: Sort temperatures_ind by index values at the city level

20print(temperatures_ind.sort_index(level="city"))

21

22 date avg_temp_c

23country city

24Côte D'Ivoire Abidjan 2000-01-01 27.293

25 Abidjan 2000-02-01 27.685

26 Abidjan 2000-03-01 29.061

27 Abidjan 2000-04-01 28.162

28 Abidjan 2000-05-01 27.547

29... ... ...

30China Xian 2013-05-01 18.979

31 Xian 2013-06-01 23.522

32 Xian 2013-07-01 25.251

33 Xian 2013-08-01 24.528

34 Xian 2013-09-01 NaN

35

36[16500 rows x 2 columns]

37

38# 「國家」名稱ABCD排序;「城市」名稱ZYXW排序. Sort temperatures_ind by country then descending city

39print(temperatures_ind.sort_index(level=["country", "city"], ascending = [True, False]))

40

41 date avg_temp_c

42country city

43Afghanistan Kabul 2000-01-01 3.326

44 Kabul 2000-02-01 3.454

45 Kabul 2000-03-01 9.612

46 Kabul 2000-04-01 17.925

47 Kabul 2000-05-01 24.658

48... ... ...

49Zimbabwe Harare 2013-05-01 18.298

50 Harare 2013-06-01 17.020

51 Harare 2013-07-01 16.299

52 Harare 2013-08-01 19.232

53 Harare 2013-09-01 NaN

54

55[16500 rows x 2 columns]

股票編號排序, 時間排序.

e.切片索引值(Slicing index values):

- 選取範圍:

first:last只要在可排序的東西上都可以切片. - 切片之前要用

.sort_index()來排序 - 切片公式:

df.loc["a":"b"]. - 切片公式進階:

df.loc[("a", "b"):("c", "d")]

1# Sort the index of temperatures_ind

2temperatures_srt = temperatures_ind.sort_index()

3

4# 篩選國家 Subset rows from Pakistan to Russia

5print(temperatures_srt.loc["Pakistan":"Russia"])

6

7# 篩選城市 Try to subset rows from Lahore to Moscow

8print(temperatures_srt.loc["Lahore":"Moscow"])

9

10# 篩選國家城市 Subset rows from Pakistan, Lahore to Russia, Moscow

11print(temperatures_srt.loc[("Pakistan", "Lahore"):("Russia", "Moscow")])

f.雙方向切片(Slicing in both directions):

- 列切片(Row slicing):

.loc[]可以先subset特定國家城市;df.loc[("a", "b"):("c", "d")] - 行切片(Column slicing):

.loc[]再一次切片「時間」與「溫度;df.loc[:, "e":"f"] - 雙切片(Row&Column slicing):

df.loc[("a", "b"):("c", "d"), "e":"f"]

1# Subset rows from India, Hyderabad to Iraq, Baghdad

2print(temperatures_srt.loc[("India", "Hyderabad"):("Iraq", "Baghdad")])

3

4# Subset columns from date to avg_temp_c

5print(temperatures_srt.loc[:, "date":"avg_temp_c"])

6

7# Subset in both directions at once

8print(temperatures_srt.loc[("India", "Hyderabad"):("Iraq", "Baghdad"), "date":"avg_temp_c"])

g. 切片時間序列(Slicing time series):

- 利用「時間範圍(Date range)」來過濾資料

- 增加

date索引, 使用.loc[]來取子集. - ISO 8601格式:

yyyy-mm-dd - 利用兩個布林條件來過濾:

df[(condition1) & (condition2)]

1# 制定日期範圍的bool變數 Use Boolean conditions to subset temperatures for rows in 2010 and 2011

2temperatures_bool = temperatures[(temperatures["date"] >= "2010-01-01") & (temperatures["date"] <= "2011-12-31")]

3print(temperatures_bool)

4

5# 設定日期為索引 Set date as the index and sort the index

6temperatures_ind = temperatures.set_index("date").sort_index()

7

8# 選日期區間 Use .loc[] to subset temperatures_ind for rows in 2010 and 2011

9print(temperatures_ind.loc["2010":"2011"])

10

11# 選日期區間 Use .loc[] to subset temperatures_ind for rows from Aug 2010 to Feb 2011

12print(temperatures_ind.loc["2010-08":"2011-02"])

h.利用row/column數字取子集:

- 取具體數字:

iloc[]. - Row/column numbers start at zero.

df.iloc[a:b]subsets only rows.- Use : without first/last values to select all rows (or columns).

1# Get 23rd row, 2nd column (index 22, 1)

2print(temperatures.iloc[22, 1])

3

4# Use slicing to get the first 5 rows

5print(temperatures.iloc[:5])

6

7# Use slicing to get columns 3 to 4

8print(temperatures.iloc[:, 2:4])

9

10# Use slicing in both directions at once

11print(temperatures.iloc[:5, 2:4])

i.利用程式與年份「樞紐」溫度(Pivot temperature by city and year):

- 看不同城市不同年份的溫度變化

- You can access the components of a date (year, month and day) using code of the form

dataframe["column"].dt.component. - For example, the month component is

dataframe["column"].dt.month, and the year component isdataframe["column"].dt.year. - 使用

.pivot_table(), 利用index設定國家與城市,columns設定年份.

1# 增加年份的欄位 Add a year column to temperatures

2temperatures["year"] = temperatures["date"].dt.year

3

4# 利用年份, 來以國家城市, 對溫度做樞紐 Pivot avg_temp_c by country and city vs year

5temp_by_country_city_vs_year = temperatures.pivot_table("avg_temp_c", index = ["country", "city"], columns = "year")

6

7# See the result

8print(temp_by_country_city_vs_year)

9

10

11year 2000 2001 2002 2003 2004 ... 2009 2010 2011 2012 2013

12country city ...

13Afghanistan Kabul 15.823 15.848 15.715 15.133 16.128 ... 15.093 15.676 15.812 14.510 16.206

14Angola Luanda 24.410 24.427 24.791 24.867 24.216 ... 24.325 24.440 24.151 24.240 24.554

15Australia Melbourne 14.320 14.180 14.076 13.986 13.742 ... 14.647 14.232 14.191 14.269 14.742

16 Sydney 17.567 17.854 17.734 17.592 17.870 ... 18.176 17.999 17.713 17.474 18.090

17Bangladesh Dhaka 25.905 25.931 26.095 25.927 26.136 ... 26.536 26.648 25.803 26.284 26.587

18... ... ... ... ... ... ... ... ... ... ... ...

19United States Chicago 11.090 11.703 11.532 10.482 10.943 ... 10.298 11.816 11.214 12.821 11.587

20 Los Angeles 16.643 16.466 16.430 16.945 16.553 ... 16.677 15.887 15.875 17.090 18.121

21 New York 9.969 10.931 11.252 9.836 10.389 ... 10.142 11.358 11.272 11.971 12.164

22Vietnam Ho Chi Minh City 27.589 27.832 28.065 27.828 27.687 ... 27.853 28.282 27.675 28.249 28.455

23Zimbabwe Harare 20.284 20.861 21.079 20.889 20.308 ... 20.524 21.166 20.782 20.523 19.756

24

25[100 rows x 14 columns]

「城市」對應「股票」;「年份」對應「年份」;「溫度」對應「報酬率」

j. 對樞紐表取子集(Subsetting pivot tables):

- 樞紐表(Pivot table): 實際是DataFrame+Sorted Indexes.

.loc[]+切面組合- To subset rows at the outer level, use

.loc["country1":"country2"]. - To subset rows at the outer level, use

.loc[("country1", "city1"):("country2", "city2")]. - To subset in both directions, pass two arguments to

.loc[].

1# 選一 Subset for Egypt to India

2temp_by_country_city_vs_year.loc["Egypt":"India"]

3

4# 選二 Subset for Egypt, Cairo to India, Delhi

5temp_by_country_city_vs_year.loc[("Egypt", "Cairo"):("India", "Delhi")]

6

7# 選三 Subset in both directions at once

8temp_by_country_city_vs_year.loc[("Egypt", "Cairo"):("India", "Delhi"), "2005":"2010"]

k. 計算樞紐表(Calculating on a pivot table):

- 樞紐表都是總結統計量, 實際可能還會想做很多操作

- Call

.mean()without arguments to get the mean of each column. - Use Boolean filtering of the form

mean_temp_by_yearequal tomean_temp_by_year.max(). - Call

.mean(), settingaxisto"columns"to get the mean of each row. - Use Boolean filtering of the form

mean_temp_by_cityequal tomean_temp_by_city.min()

1# 計算每個國家城市每一年的平均氣溫 Get the worldwide mean temp by year

2mean_temp_by_year = temp_by_country_city_vs_year.mean()

3

4# 找到最大氣溫的那年 Filter for the year that had the highest mean temp

5print(mean_temp_by_year[mean_temp_by_year == mean_temp_by_year.max()])

6

7year

82013 20.312

9dtype: float64

10

11# 每個城市的平均溫度 Get the mean temp by city

12mean_temp_by_city = temp_by_country_city_vs_year.mean(axis="columns")

13

14# 最低氣溫的國家城市 Filter for the city that had the lowest mean temp

15print(mean_temp_by_city[mean_temp_by_city == mean_temp_by_city.min()])

16

17country city

18China Harbin 4.877

19dtype: float64

D. 創造與可視化DataFrames (Creating and Visualizing DataFrames)

具現Pandas: 有3大技能

- 可視化DataFrames: Visualize DataFrames

- 處理遺失值: Handle missing data values

- CSV輸入輸出 (CSV files: import data from & export data)

心法

四種圖表:

- 畫長條圖(bar), 折線圖(line), 散佈圖(scatter),直方圖(hist)

****: ****:

技法

****: ****: ****:

用法

****: ****: ****:

語法

a. 哪種酪梨大小最受歡迎?(Which avocado size is most popular?):

- 載入

matplotlib.pyplot來畫圖 - 畫出不同大小的酪梨

nb_sold_by_size = avocados.groupby("size")["nb_sold"].sum()表示以大小分類, 銷售總額 - 畫圖

nb_sold_by_size.plot(kind="bar")這個指令很神奇,nb_sold_by_size是pandas.core.series.Series資料類型, 代表其plot有被寫出來. pandas.Series.plot:是官方文檔, 一種多態的實踐.

kind="bar"

1# Import matplotlib.pyplot with alias plt

2import matplotlib.pyplot as plt

3

4# Look at the first few rows of data

5print(avocados.head())

6

7# Get the total number of avocados sold of each size

8nb_sold_by_size = avocados.groupby("size")["nb_sold"].sum()

9

10# Create a bar plot of the number of avocados sold by size

11nb_sold_by_size.plot(kind="bar")

12

13# Show the plot

14plt.show()

b.銷售隨時間的變化(Changes in sales over time):

- 畫出三年酪梨銷量變化

- 畫出不同日期的酪梨:

nb_sold_by_date = avocados.groupby("date")["nb_sold"].sum() - 畫出直線圖

nb_sold_by_date.plot(kind="line")

kind="line"

1# Import matplotlib.pyplot with alias plt

2import matplotlib.pyplot as plt

3

4# Get the total number of avocados sold on each date

5nb_sold_by_date = avocados.groupby("date")["nb_sold"].sum()

6

7# Create a line plot of the number of avocados sold by date

8nb_sold_by_date.plot(kind="line")

9

10# Show the plot

11plt.show()

c. 酪梨的需求與供給(Avocado supply and demand):

avocados是pandas.core.frame.DataFrame資料類型, 直接畫散佈圖.- 畫出散佈圖

avocados.plot(x="nb_sold", y="avg_price", kind="scatter", title="Number of avocados sold vs. average price").

kind="scatter": Scatter plot.

1# Scatter plot of nb_sold vs avg_price with title

2avocados.plot(x="nb_sold", y="avg_price", kind="scatter", title="Number of avocados sold vs. average price")

3

4# Show the plot

5plt.show()

d.傳統以及有機酪梨的價錢(Price of conventional vs. organic avocados):

- 傳統酪梨的平均價錢直方圖

avocados[avocados["type"] == "conventional"]["avg_price"].hist() - 有機酪梨的平均價錢直方圖

avocados[avocados["type"] == "organic"]["avg_price"].hist() alpha:可以調整透明度

1# Histogram of conventional avg_price

2avocados[avocados["type"] == "conventional"]["avg_price"].hist()

3

4# Histogram of organic avg_price

5avocados[avocados["type"] == "organic"]["avg_price"].hist()

6

7# Add a legend

8plt.legend(["conventional", "organic"])

9

10# Show the plot

11plt.show()

- 有機的賣比較貴

- 更好看的圖

.hist(alpha=0.5)

- 更多直方體

.hist(alpha=0.5, bins=20)

遺失資料

e. 找到遺失資料(Finding missing values):

- 有些函數遇到missing values會掛掉.

- 需要一個方法來看看dataset裡面的missing value在哪邊.

.isna()方法: 看「個體資料遺失」, 是會顯示1.any()方法: 找特定的value是不是存在, 如果有1就代表missing存在.sum()方法:可以算有幾個missing value.

1# Import matplotlib.pyplot with alias plt

2import matplotlib.pyplot as plt

3

4# Check individual values for missing values

5print(avocados_2016.isna())

6

7# Check each column for missing values

8print(avocados_2016.isna().any())

9

10date False

11avg_price False

12total_sold False

13small_sold True

14large_sold True

15xl_sold True

16total_bags_sold False

17small_bags_sold False

18large_bags_sold False

19xl_bags_sold False

20dtype: bool

21

22# Bar plot of missing values by variable

23avocados_2016.isna().sum().plot(kind="bar")

24

25# Show plot

26plt.show()

f. 移除遺失值(Removing missing values):

- 移除所有包含missing values的row

.dropna()方法: 把那些row都丟掉- Delightful dropping! Removing observations with missing values is a quick and dirty way to deal with missing data, but this can introduce bias to your data if the values are not missing at random.

1# Remove rows with missing values

2avocados_complete = avocados_2016.dropna()

3

4# Check if any columns contain missing values

5print(avocados_complete.isna().any())

6

7date False

8avg_price False

9total_sold False

10small_sold False

11large_sold False

12xl_sold False

13total_bags_sold False

14small_bags_sold False

15large_bags_sold False

16xl_bags_sold False

17dtype: bool

g. 替代遺失值(Replacing missing values):

- 例如假設遺失值是0, 補0.

- 也可以「把有遺失值的欄位」的「資料分佈畫出來」, 接下來補個平均.

- 使用

.fillna()方法來補遺失值 - Fabulous filling! Notice how the distribution has changed shape after replacing missing values with zeros.

- 補了以後其實也會改變「資料的分佈」. 所以要怎麼補遺失值是一個大問題.

1# List the columns with missing values

2cols_with_missing = ["small_sold", "large_sold", "xl_sold"]

3

4# Create histograms showing the distributions cols_with_missing

5avocados_2016[cols_with_missing].hist()

6

7# Show the plot

8plt.show()

1# From previous step

2cols_with_missing = ["small_sold", "large_sold", "xl_sold"]

3avocados_2016[cols_with_missing].hist()

4plt.show()

5

6# Fill in missing values with 0

7avocados_filled = avocados_2016.fillna(0)

8

9# Create histograms of the filled columns

10avocados_filled[cols_with_missing].hist()

11

12# Show the plot

13plt.show()

創造DataFrames

h.: i.: j.: ****: ****: ****: ****: ****: ****: ****: ****:

後記

四個單元有點太多, 先做兩個單元, 之後補上剩下兩個單元.

220131: 投入50分鐘, 把樞紐分析相關的內容跑通, 做了總結, 完成第二模塊. 這些模塊都很扎實, 持續投入完善內容!

2022.01.29. 紫蕊 於 西拉法葉, 印第安納, 美國.

| Version | Date | Summary |

|---|---|---|

| 0.1 | 2022-01-29 | 完成1.5個模塊 |

| 0.2 | 2022-01-31 | 完成第二的模塊 |

| 0.3 | 2022-02-01 | 花30分鐘, 學習怎麼操作「索引(Index)」來做切片(Slicing). 此功能對「特定範圍」, 「特定日期」的股票報酬率會有幫助, 像是一種更進階的樞紐操作. |

| 0.4 | 2022-02-02 | 花30分鐘, 將第三模組的程式碼看過一趟, 之後寫總結. |

| 0.5 | 2022-02-07 | 花30分鐘, 學畫四種圖: 畫長條圖(bar), 折線圖(line), 散佈圖(scatter),直方圖(hist) . |

| 0.6 | 2022-02-08 | 花25分鐘, 學習補missing value相關的知識. |

評論